CT-GANs (Contextual Generative Adversarial Networks)

Before diving into CT-GANs, let's first look at what GANs (Generative Adversarial Networks) are:

GANs (Generative Adversarial Networks):

GANs are a type of machine learning model that can be used to generate realistic data, such as images, text, or music. GANs work by pitting two neural networks against each other in a zero-sum game, where one network's gain is the other's loss.

The first neural network is called the generator and is responsible for creating new data. The second neural network is called the discriminator and is responsible for distinguishing between real data and data created by the generator.

Overall, the generator creates new data and the discriminator tries to tell real data apart from fake data.
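
The zero-sum game is usually written as a single value function that the discriminator D tries to maximize and the generator G tries to minimize. This is the standard formulation from the original GAN paper, shown here for reference: x is a real sample, z is a random noise vector, and D(·) is the discriminator's estimated probability that its input is real.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The first term rewards the discriminator for scoring real data close to 1, the second rewards it for scoring generated data G(z) close to 0, and the generator works against that second term.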

Now let's look at what CT-GANs are:

CT-GANs (Contextual Generative Adversarial Networks):

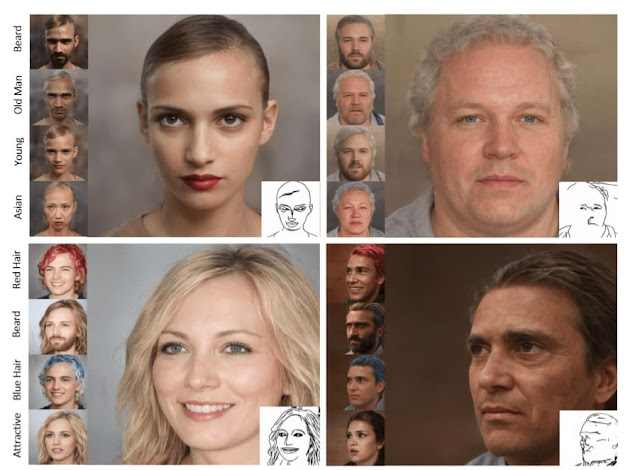

As the name suggests, contextual GANs are a type of GAN that takes into account the context of the data being generated. The context can be anything from the previous images in a sequence to a text description of an image.

In traditional GANs, the generator and discriminator are trained against each other without any context: the generator learns to generate fake data from random noise alone, and the discriminator learns to distinguish between real and fake data by looking at the data alone.

In CT-GANs, the context is provided to both the generator and the discriminator. This allows the generator to learn to generate data that is consistent with the context, and allows the discriminator to learn to distinguish between real and fake data given the context, which can differ from one example to the next.
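
A common way to provide the context to both networks is to concatenate a vector representation of it to each network's usual input. The sketch below assumes the context has already been turned into a fixed-length embedding (for example by a text encoder) and uses plain Python lists in place of tensors; `generator_input` and `discriminator_input` are hypothetical helper names, not part of any library.

```python
from typing import List

Vector = List[float]


def generator_input(noise: Vector, context: Vector) -> Vector:
    """Hypothetical helper: the generator sees random noise followed by the context embedding."""
    return noise + context


def discriminator_input(sample: Vector, context: Vector) -> Vector:
    """Hypothetical helper: the discriminator sees a (real or generated) sample plus the same context.

    Because the context is part of its input, the discriminator can judge
    whether a sample is realistic *for that context*.
    """
    return sample + context


noise = [0.3, -1.2, 0.7]            # z, normally drawn from N(0, 1)
context = [1.0, 0.0]                # toy embedding of a description such as "a red bird"
fake_image = [0.1, 0.5, -0.4, 0.9]  # flattened toy "image" produced by the generator

print(generator_input(noise, context))                # [0.3, -1.2, 0.7, 1.0, 0.0]
print(len(discriminator_input(fake_image, context)))  # 6
```

Image models often replicate or project the embedding rather than appending it to a flat vector, but the principle is the same: both networks see the context alongside their usual input.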

Components of CT-GANs:

Generator: the generator is a neural network that is responsible for generating images. It takes as input a noise vector and a text description (encoded by the text encoder) and outputs an image.

Discriminator: the discriminator is responsible for distinguishing between real images and images generated by the generator. It takes as input an image together with its text description and outputs a probability that the image is real.

Text encoder: the text encoder is a neural network that takes a text description as input and outputs a vector representation (an embedding) of it, which is passed to the generator and the discriminator as the context.
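
The text encoder's job is to turn a description into a fixed-length vector that the generator and discriminator can consume. Real models use a learned neural encoder (for example a recurrent network or a Transformer); the sketch below swaps in a much simpler, non-learned bag-of-words encoding only to show the input/output contract. `build_vocab` and `encode_text` are hypothetical names.

```python
import math
from typing import Dict, List


def build_vocab(descriptions: List[str]) -> Dict[str, int]:
    """Assign an index to every lower-cased word seen in the training descriptions."""
    vocab: Dict[str, int] = {}
    for text in descriptions:
        for word in text.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab


def encode_text(text: str, vocab: Dict[str, int]) -> List[float]:
    """Stand-in encoder: a unit-length bag-of-words vector (unknown words are ignored)."""
    vec = [0.0] * len(vocab)
    for word in text.lower().split():
        if word in vocab:
            vec[vocab[word]] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else vec


vocab = build_vocab(["a red bird", "a small yellow bird"])
print(vocab)  # {'a': 0, 'red': 1, 'bird': 2, 'small': 3, 'yellow': 4}
embedding = encode_text("red red bird", vocab)  # fixed length: one entry per vocabulary word
print(len(embedding))  # 5
```

A learned encoder would also place descriptions with similar meanings (such as "small" and "tiny") close together, which simple word counts cannot do.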

Loss function: the loss function measures the difference between the generated images and the real images, as judged by the discriminator's outputs. It is used to train both the generator and the discriminator.
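
A common choice for this loss is binary cross-entropy on the discriminator's output probabilities. The sketch below assumes that choice (other GAN variants use different losses, such as the Wasserstein loss) and uses the widely used non-saturating form for the generator; the function names are hypothetical and plain lists stand in for tensors.

```python
import math
from typing import List

EPS = 1e-12  # keeps log() finite when a probability is exactly 0 or 1


def discriminator_loss(p_real: List[float], p_fake: List[float]) -> float:
    """Binary cross-entropy: real images should score 1, generated images 0.

    p_real / p_fake are the discriminator's outputs, i.e. D(image, context).
    """
    real_term = sum(-math.log(max(p, EPS)) for p in p_real) / len(p_real)
    fake_term = sum(-math.log(max(1.0 - p, EPS)) for p in p_fake) / len(p_fake)
    return real_term + fake_term


def generator_loss(p_fake: List[float]) -> float:
    """Non-saturating generator loss: generated images should be scored as real (1)."""
    return sum(-math.log(max(p, EPS)) for p in p_fake) / len(p_fake)


# Discriminator outputs for a batch of real images and a batch of generated images.
p_real = [0.9, 0.8]
p_fake = [0.2, 0.1]
print(round(discriminator_loss(p_real, p_fake), 4))  # 0.3285
print(round(generator_loss(p_fake), 4))              # 1.956
```

Here the discriminator is doing well (low loss), so the generator's loss is high: its images are confidently classified as fake.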

Some applications of CT-GANs:

Image generation: CT-GANs can be used to generate images that are consistent with a given context, such as the previous image in a sequence or a text description of the image.

Text generation: CT-GANs can be used to generate text that is consistent with a given context, such as the previous sentences in a text or the topic of the text.

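How CT-GANs are trained:

1. The generator is initialized with random weights.

2. The discriminator is also initialized with random weights.

3. A batch of text descriptions and real images is loaded from the training dataset.

4. The generator takes the text descriptions, together with a batch of noise vectors, as input and generates a batch of images.

5. The discriminator takes the generated images and the real images (each with its text description) as input and outputs, for each image, the probability that it is real.

6. The generator's loss is calculated from the difference between the discriminator's output for the generated images and 1, since the generator wants its images to be classified as real.

7. The discriminator's loss is calculated from the difference between its output for the real images and 1, plus the difference between its output for the generated images and 0.

8. The weights of the generator and the discriminator are updated by gradient descent, using gradients computed with backpropagation.

9. Steps 3-8 are repeated until the generator and discriminator reach a Nash equilibrium, in which neither network can lower its loss by changing only its own weights. In practice, training is usually stopped after a fixed number of iterations, because this equilibrium is rarely reached exactly.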
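
The training steps above can be sketched end to end on a deliberately tiny problem. Everything here is an assumption made for illustration: the "images" are single numbers, the context is a binary label c standing in for a text embedding, real samples for context c are drawn from a normal distribution centred at -2 or +2, the generator is a linear function of noise and context, the discriminator is a logistic unit, gradients are derived by hand with the chain rule instead of an autograd framework, and the loop runs for a fixed number of steps rather than testing for a Nash equilibrium.

```python
import math
import random

EPS = 1e-12  # keeps log() finite when reporting losses


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def sample_real(rng):
    """Toy dataset: context c in {0, 1}, real sample x ~ N(4c - 2, 0.5)."""
    c = rng.randint(0, 1)
    return c, rng.gauss(4.0 * c - 2.0, 0.5)


def generate(g, z, c):
    """Toy generator: a linear function of noise z and context c."""
    return g["a"] * z + g["b"] * c + g["d"]


def discriminate(d, x, c):
    """Toy discriminator: probability that x is real *for context c*."""
    return sigmoid(d["wx"] * x + d["wc"] * c + d["wxc"] * x * c + d["b"])


def train(steps=500, batch_size=32, lr=0.05, seed=0):
    rng = random.Random(seed)
    # Steps 1-2: random initial weights for both networks.
    g = {k: rng.gauss(0.0, 0.1) for k in ("a", "b", "d")}
    d = {k: rng.gauss(0.0, 0.1) for k in ("wx", "wc", "wxc", "b")}
    d_loss = g_loss = 0.0
    for _ in range(steps):
        # Step 3: a batch of (context, real sample) pairs, plus noise.
        batch = [sample_real(rng) for _ in range(batch_size)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch_size)]
        # Step 4: the generator produces one sample per context.
        fakes = [generate(g, z, c) for z, (c, _) in zip(noise, batch)]

        # Steps 5 and 7: score both batches; BCE gradient w.r.t. the logit is p - target.
        grad_d = dict.fromkeys(d, 0.0)
        d_loss = 0.0
        for (c, x_real), x_fake in zip(batch, fakes):
            p_real, p_fake = discriminate(d, x_real, c), discriminate(d, x_fake, c)
            d_loss -= math.log(max(p_real, EPS)) + math.log(max(1.0 - p_fake, EPS))
            for x, ds in ((x_real, p_real - 1.0), (x_fake, p_fake)):
                grad_d["wx"] += ds * x
                grad_d["wc"] += ds * c
                grad_d["wxc"] += ds * x * c
                grad_d["b"] += ds
        # Step 8 (discriminator): gradient-descent update.
        for k in d:
            d[k] -= lr * grad_d[k] / batch_size
        d_loss /= batch_size

        # Step 6: generator loss -log D(G(z, c), c), pushing D's output towards 1.
        grad_g = dict.fromkeys(g, 0.0)
        g_loss = 0.0
        for (c, _), z, x_fake in zip(batch, noise, fakes):
            p_fake = discriminate(d, x_fake, c)
            g_loss -= math.log(max(p_fake, EPS))
            dx = (p_fake - 1.0) * (d["wx"] + d["wxc"] * c)  # chain rule through D
            grad_g["a"] += dx * z
            grad_g["b"] += dx * c
            grad_g["d"] += dx
        # Step 8 (generator): gradient-descent update.
        for k in g:
            g[k] -= lr * grad_g[k] / batch_size
        g_loss /= batch_size
    return g, d, d_loss, g_loss


if __name__ == "__main__":
    g, d, d_loss, g_loss = train()
    print(f"D loss {d_loss:.3f}, G loss {g_loss:.3f}")
    print("generator weights:", g)
```

Because both players keep adapting to each other, the parameters in a toy like this can oscillate instead of settling; a real CT-GAN would use deep networks, a framework's automatic differentiation, and an optimizer such as Adam.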
