Large Language Models : The Future Of AI

Large language models (LLMs) are a type of artificial intelligence (AI) that are trained on massive datasets of text and code. This allows them to learn the statistical relationships between words and phrases, and to generate text that is both coherent and grammatically correct.

LLMs are still under development, but they have already been used to achieve impressive results in a variety of tasks, including:

- Text classification: LLMs can be trained to classify text into different categories, such as news articles, product reviews, or social media posts. For example, an LLM could be trained to classify news articles as either "positive" or "negative" based on the sentiment of the text.

- Question answering: LLMs can be used to answer questions about text, even if the questions are open ended or challenging. For example, an LLM could be used to answer questions like "What is the capital of France?" or "What is the meaning of life?"

- Document summarization: LLMs can be used to summarize the key points of a document in a concise and informative way. For example, an LLM could be used to summarize a research paper in a few sentences.

- Text generation: LLMs can be used to generate text, such as poems, code, scripts, or musical pieces. For example, an LLM could be used to generate a poem about love or a piece of code that solves a specific problem.

LLMs are a powerful tool that has the potential to revolutionize the way we interact with computers. They could be used to create more natural and intuitive user interfaces, to generate personalized content, and to automate tasks that are currently done by humans.

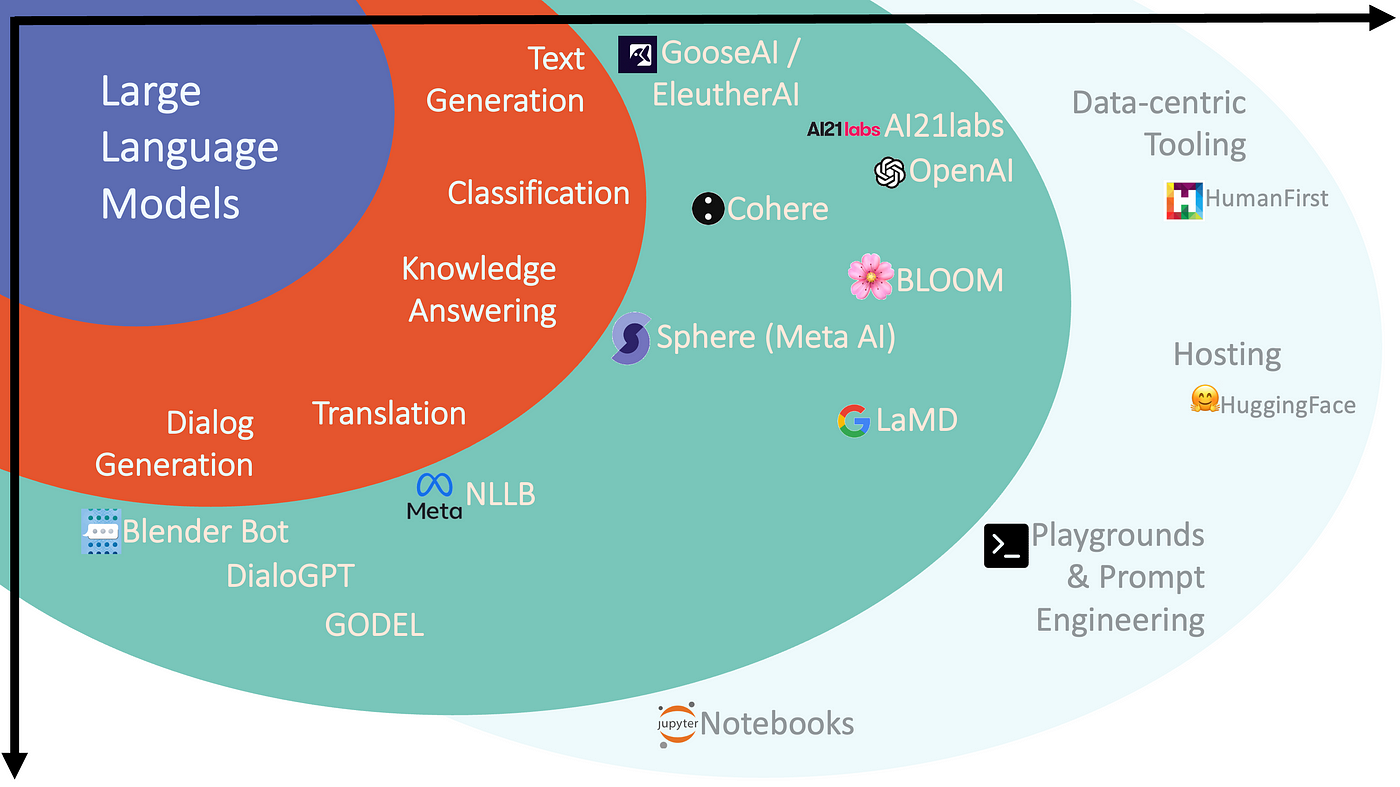

What are the different types of LLMs?

There are three main types of LLMs:

- Generic language models: These models are trained on a wide variety of text data, and can be used for a variety of tasks. For example, the GPT-3 language model from OpenAI is a generic language model that has been trained on a massive dataset of text and code.

- Instruction-tuned language models: These models are trained on a specific set of instructions, and can be used to perform specific tasks, such as answering questions or generating text. For example, the LaMDA language model from Google is an instruction-tuned language model that has been trained to answer questions about factual topics.

- Dialog-tuned language models: These models are trained on a set of dialogues, and can be used to hold conversations with humans. For example, the XiaoIce language model from Microsoft is a dialog-tuned language model that has been trained to hold conversations with humans in a natural and engaging way.

How are LLMs trained?

LLMs are trained using a process called self-supervised learning. In self-supervised learning, the model is not given any labels for the data it is trained on. Instead, the model is asked to predict the next word in a sentence, given the surrounding context. This process helps the model to learn the statistical relationships between words and phrases.

How are LLMs used?

LLMs are used in a variety of applications, including:

- Natural language processing: LLMs can be used to understand and process natural language, such as text and speech. For example, LLMs can be used to translate text from one language to another or to answer questions about text.

- Machine translation: LLMs can be used to translate text from one language to another. For example, the Google Translate service uses LLMs to translate text between over 100 languages.

- Question answering: LLMs can be used to answer questions about text, even if the questions are open ended or challenging. For example, the Google Search service uses LLMs to answer questions that are asked in natural language.

- Text generation: LLMs can be used to generate text, such as poems, code, scripts, or musical pieces. For example, the OpenAI GPT-3 language model has been used to generate realistic-looking news articles and code.

- Chatbots: LLMs can be used to create chatbots that can hold conversations with humans. For example, the XiaoIce chatbot from Microsoft is powered by an LLM that can hold conversations with humans in a natural and engaging way.

The future of LLMs

LLMs are still under development, but they have the potential to revolutionize the way we interact with computers. They could be used to create more natural and intuitive user interfaces, to generate personalized content, and to automate tasks that are currently done by humans.

As LLMs continue to develop, they are likely to become more powerful and versatile. They could be used to solve a wide variety of problems, and to create new and innovative applications.

The potential applications of LLMs are endless. As LLMs continue to develop, they are likely to have a profound impact on the way we live and work.

Architecture of Large Language Models (LLMs)

LLMs are typically based on a neural network architecture called a transformer. Transformers are a type of neural network that are well-suited for natural language processing tasks. They work by learning the relationships between words and phrases in a sentence, and by using this information to predict the next word in a sequence.

The transformer architecture is composed of a stack of self-attention layers. Each self-attention layer takes a sequence of tokens as input and produces a sequence of output tokens. The self-attention layers are responsible for learning the relationships between the words in a sentence.

In addition to the transformer architecture, LLMs also typically use a technique called pre-training. Pre-training is a process of training the model on a large dataset of text and code. This allows the model to learn the statistical relationships between words and phrases, and to generate text that is both coherent and grammatically correct.

After the model is pre-trained, it can be fine-tuned on a specific task. Fine-tuning is a process of adjusting the model's parameters to improve its performance on the specific task. For example, an LLM that has been pre-trained on a massive dataset of text can be fine-tuned to answer questions about factual topics.

The architecture of LLMs is constantly evolving as researchers develop new techniques to improve their performance. However, transformer architecture and pre-training are two of the most important concepts in the design of LLMs.

Here are some of the challenges in the architecture of LLMs:

- Data requirements: LLMs require a massive amount of data to train. This can be a challenge, as it can be difficult to collect and annotate large datasets of text and code.

- Computational requirements: LLMs are computationally expensive to train and use. This can be a challenge, as it requires powerful hardware and software infrastructure.

- Bias: LLMs can be biased, as they are trained on data that is created by humans. This can lead to problems, such as LLMs generating text that is offensive or discriminatory.

- Safety: LLMs can be used to generate harmful content, such as fake news or hate speech. This is a serious concern, and it is important to develop safeguards to prevent LLMs from being used for malicious purposes.

Despite these challenges, LLMs are a powerful tool that has the potential to revolutionize the way we interact with computers. As LLMs continue to develop, they are likely to become more powerful and versatile, and to be used in a wider range of applications.

Comments

Post a Comment