Building Responsible A.I.: Navigating Issues, Factors, and Ethical Guidelines

In the realm of technological advancement, the development of artificial intelligence (A.I.) has emerged as a groundbreaking frontier. However, this remarkable progress comes hand in hand with the pressing need for responsible A.I. development. Crafting intelligent systems that contribute positively to society necessitates a deep understanding of potential pitfalls, limitations, and unforeseen consequences.

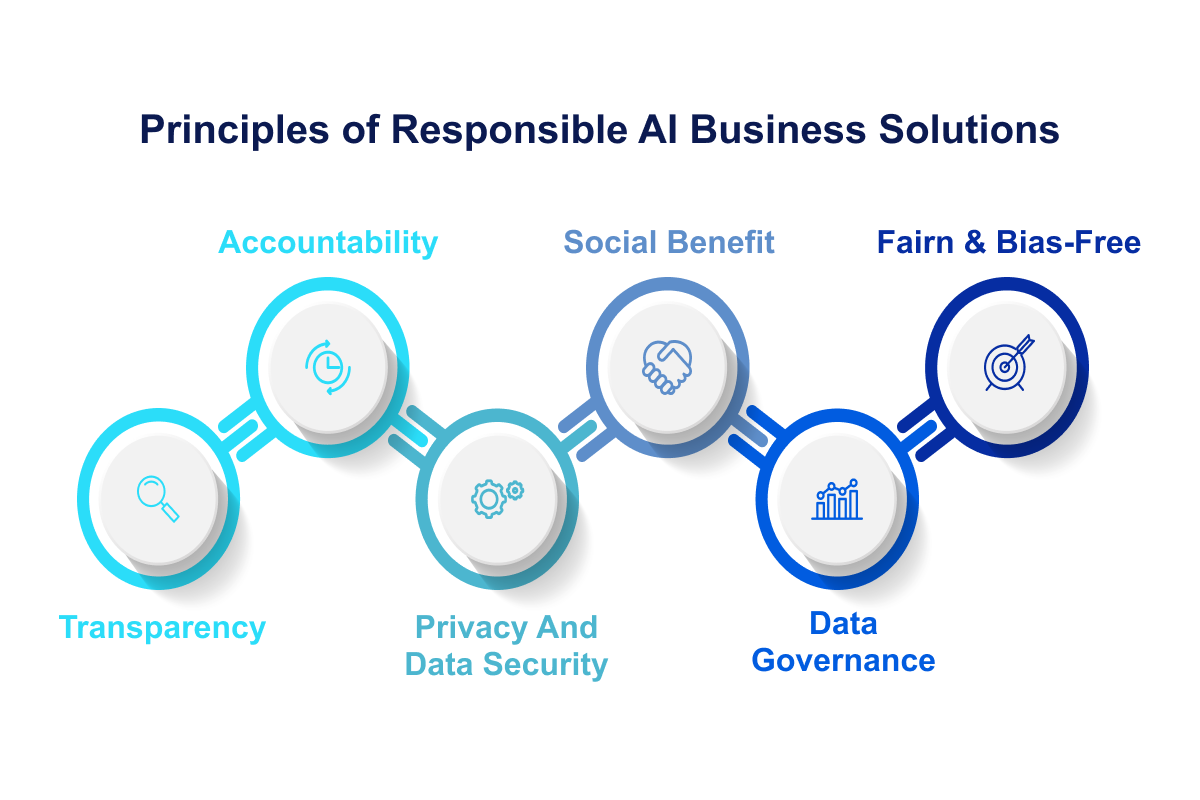

Understanding Responsible A.I.: The Cornerstones

The journey toward responsible A.I. hinges on a foundation of several core factors, each playing a pivotal role in shaping the ethical landscape of artificial intelligence.

Transparency (Transparency Matters):

Transparency in A.I. development means a willingness to disclose methodologies, data sources, and decision-making processes. This principle reduces opacity and fosters trust among users. When stakeholders can see how an A.I. system reaches its conclusions, they are better placed to question, verify, and improve it.

Accountability (For Every Line of Code):

Accountability is central to the A.I. ecosystem. Developers and stakeholders must acknowledge the responsibility they bear for the actions of the A.I. systems they create. Accepting that responsibility helps keep A.I. technologies aligned with ethical standards and limits their negative impacts.

Fairness (No One Left Behind):

Equity and fairness drive the development of A.I. systems that do not discriminate based on race, gender, or any other characteristic. By actively identifying and mitigating biases in data and models, developers can foster a more inclusive and equitable environment for all users.
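One way to make the fairness principle concrete is to compare how often a model gives a positive outcome to each group. The sketch below is a minimal illustration, not a complete fairness audit: the function names `selection_rates` and `demographic_parity_gap`, the toy predictions, and the group labels "a" and "b" are all assumptions made for this example. It computes a simple demographic parity gap, which is only one of several fairness measures.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the fraction of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(predictions, groups):
    """Return the difference between the highest and lowest selection rate."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 means the model approved, 0 means it declined.
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_gap(predictions, groups)
print(rates)
print(gap)
```

A gap near zero suggests the groups receive positive outcomes at similar rates. A large gap is a signal to investigate the data and the model, not proof of discrimination on its own.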

Privacy (Guardian of Data):

Respecting privacy is essential in the age of data. Responsible A.I. systems are built to respect user privacy, ensuring that sensitive information remains secure and protected from unauthorized access.
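As a small illustration of protecting sensitive information, the sketch below replaces direct identifiers with keyed hashes before a record is stored or used for analysis. It is a simplified example under stated assumptions: the helper names `pseudonymize` and `protect_record`, the sample record, and the hard-coded key are hypothetical, and pseudonymization alone does not make data anonymous. A real system would also need secure key management, access control, and encryption.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 digest of the value as a hex string."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def protect_record(record: dict, sensitive_fields: set, secret_key: bytes) -> dict:
    """Return a copy of the record with sensitive fields pseudonymized."""
    return {
        key: pseudonymize(str(value), secret_key) if key in sensitive_fields else value
        for key, value in record.items()
    }


# Hypothetical record and key, for illustration only.
# In practice the key would come from a secrets manager, not source code.
secret_key = b"example-key-do-not-use"
record = {"email": "user@example.com", "age_band": "30-39"}

protected = protect_record(record, {"email"}, secret_key)
print(protected)
```

Because the hash is keyed and deterministic, the same identifier always maps to the same token, so records can still be linked without exposing the original value.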

Elements of a Responsible A.I.: Guiding Principles

A responsible A.I. goes beyond avoiding pitfalls and actively embraces key principles to create systems that positively impact society:

Built for Everyone:

A responsible A.I. is accessible to all, ensuring that its benefits are not limited to a specific demographic or group. It strives to address a wide range of societal challenges and cater to diverse user needs.

Accountable & Safe:

Safety is a non-negotiable element. Responsible A.I. development includes thorough testing and rigorous safety measures to prevent undesirable consequences. Developers hold themselves accountable for the outcomes of their creations.

Respect Privacy:

User data is sacred. A responsible A.I. upholds privacy by adopting robust data protection mechanisms and maintaining transparency in data collection and usage practices.

Driven by Scientific Excellence:

Science forms the bedrock of responsible A.I. A commitment to scientific excellence ensures that A.I. systems are grounded in sound research, verifiable methodologies, and reliable results.

Google's A.I. Principles: A Guiding Light

As one prominent example of responsible A.I. development, Google has established a set of principles that guide its approach:

Social Benefit:

Google's A.I. endeavors prioritize creating technologies that bring about positive changes to society, ranging from healthcare innovations to environmental solutions.

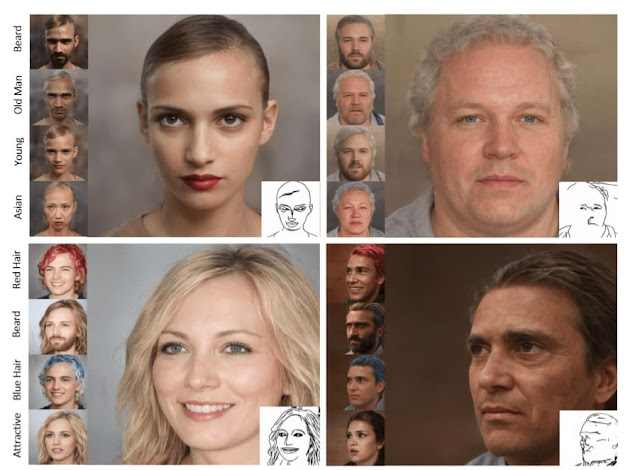

Avoiding Bias:

Avoiding unfair bias is a central tenet. Google strives to create A.I. systems that do not treat people unfairly on the basis of cultural, racial, or gender factors.

Safety First:

Safety is paramount. Rigorous testing is meant to ensure that Google's A.I. technologies do not pose undue risks to users or society at large.

Accountable to People:

A.I. systems should serve people's needs and remain answerable to them. Google's commitment to accountability means designing A.I. technologies that stay under appropriate human direction and enhance human lives responsibly.

Privacy as Priority:

Google incorporates privacy considerations into every A.I. endeavor, with the aim of keeping user data confidential and protected.

Scientific Excellence:

Scientific rigor is integral to Google's A.I. pursuits, so that its technologies are credible and validated through solid research.

Ethical Deployment:

Google commits to making A.I. technologies available only for purposes that align with the principles of social benefit, fairness, and accountability.

In the world of A.I., each step carries the weight of responsibility. Understanding potential pitfalls, adhering to guiding principles, and adopting ethical frameworks together form the compass that will lead us toward a future where A.I. innovations are not only groundbreaking but also ethically sound, beneficial, and responsible.
